Recently, on one of the user groups, one of my colleague posted a question about loading

one-to-many collections. His requirement was quite unique compared to the stock standard

one-to-many collections. They were using

Hibernate as the ORM tool.

The Requirement:

I will try and explain the requirement using an example.

- Let's say there are entities that need to store a set of attributes.

- Attribute's are nothing but (key, value) pairs.

- Attributes could be associated with any class that needs to have attributes.

- For example, Image can have attributes like what is its dimension, what is its resolution etc.

- While Video might need to save information like, what is its length and format.

We could argue here that, both

Image and

Video are

Asset's and

Asset can have

Attribute's. However, the point I am trying to make here is, there could be a totally unrelated class that needs to save attributes, for example we could have

Attribute's associated with a

Car class. There is really nothing common between

Image and a

Car.

Hence, for the scope of this post we will assume that attributes could be associated with almost any entity and these entities are not related to each other in any way.

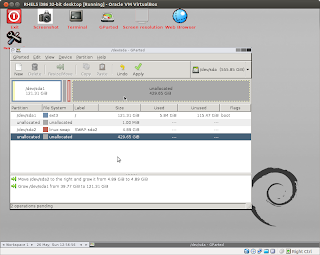

So far so good, the unique part was how they saved the parent entity reference. Let's have a look at some sample data:

|

| Showing how information will be saved using the ATTRIBUTE_IDENTIFIER column |

Notice the

ATTRIBUTE_IDENTIFIER column value?

Yes, that's the most interesting part. To identify which attribute is associated with which entity the reference is stored in the following format:

<Full Class Name of Entity>:<Entity ID>

Weird? Yes, Weird but very interesting!

If we were to design the system from scratch then, obviously we would map the table a little differently but more often than not, we really have to live with what we have in hand. So given the fact that we cannot alter the schema or store the information in any different way, challenge was to map the Attribute class with

Image and

Video entities so that we can achieve the desired result?

So much to clarify the requirement, phew!

How do they do it!

I had to look around a bit and try out a few things before I could find the solution for this requirement.

Short Story:

Use the custom SQL query to load the

one-to-many collection entity.

Long Story:

Without wasting any more time, let's look at the code. The

Image and Video entity classes would look as follows

They are mostly stock standard classes but a few things to notice:

- They implement a convenience interface called AttributeProvider. This interface is purely for convenience reasons its actually not really required (The code for AttributeProvider class is also shown above).

- Both Image and Video class have a collection of Attribute entities (i.e. they both have a one-to-many relationship with Attribute entity)

- The method addAttribute adds the Attribute instance to the collection and sets the back reference to the parent entity in the Attribute class. We will see how Attribute class handles this back reference in the next section.

The

Attribute class would look as follows:

There are a few things worth noticing about this class:

- It does not map the parent entity (i.e. Image or Video), it declares a reference to AttributeProvider interface but marks it as @Transient. This instance is only needed when we generate the value of attributeIdentifier for the first time while saving the Attribute via cascading effect.

- It has a property called attributeIdentifier this will hold the value that will uniquely identify the entity associated with this Attribute.

- The getter for attributeIdentifier implements the logic needed to generate the identifier.

- It first check if the property attributeIdentifier is not null, if so then, return that value

- Else check if AttributeProvider is not null (i.e. the transient object), if so then, construct the attributeIdentifier in the <Full Class Name of Entity>:<Entity ID> format.

- In all other cases return null

The entities are done, lets look at the mapping hbm.xml file for these entities

The mapping file for

Image would look something like this:

Note that:

- Everything else looks extremely common, only part that might be a little unique is the <loader /> tag

- We are specifying a query-ref called loadImageAttributes in the loader tag. This informs Hibernate that, we want to load this one-to-many collection use the query identified by name "loadImageAttributes"

- The key column specified in the mapping is called "ENTITY_ID". Remember this column name, its going to play an important role in the next part.

The mapping file for

Video:

Here the name of the loader

query-ref is

"loadVideoAttributes" and that is the only difference between the two mappings.

The mapping for

Attribute:

Wow! this one has no mention of any of the parent entities, it only maps its basic properties without any relations. Moreover, we didn't notice the mapping for the column

"ENTITY_ID" (remember this column was mapped as the key column for the

one-to-many association between

Image-Attribute and Video-Attribute relationships).

How will the relationship between Image-Attribute and Video-Attribute work without this column?

The real magic happens in the loader queries that we are about to write. The loader query for

"loadImageAttributes":

Few interesting things about this query:

- Role attribute of <load-collection /> tag needs to point to the collection which will be loaded using this query. In our example we want to load the Image.attributes collection.

- In addition to the other columns in the select clause we added another derived column called ENTITY_ID.

- This column is the same column that we used while mapping the one-to-many association between Image and Attribute.

- This column value is derived by removing the first 34 characters from the ATTRIBUTE_IDENTIFIER column

- Why did we remove 34 characters? How did we reach to this number?

- Let's recollect how the Attribute is stored. The ATTRIBUTE_IDENTIFIER column will have the value like com.gitshah.hibernate.test.Image:1.

- To map it to an Image we need the Image ID. The Image ID is stored after 34 characters (i.e. after "com.gitshah.hibernate.test.Image:" whose length is 34 characters) in the ATTRIBUTE_IDENTIFIER column

- Hence, to get the entity ID for Image entity we strip off first 34 characters from the value stored in ATTRIBUTE_IDENTIFIER column.

- The where condition constructs the ATTRIBUTE_IDENTIFIER value using the formula <Full Class Name of Entity>:<Entity ID>

- We only know the Full Class Name of the Entity (in this example com.gitshah.hibernate.test.Image) and ID of each Image instance would differ, because of this, we cannot construct the value of ATTRIBUTE_IDENTIFIER completely.

- We let Hibernate fill in the ID value of the Image for us at the run time using a named parameter :imageId.

- At run time when Hibernate needs to load Attribute's for Image with ID=9, it will automatically bind the named parameter :imageId to the value 9.

We are almost done. Let's query look at the

"loadVideoAttributes" query.

It looks almost exactly like the previous query, on change is all references of

Image have been replaced by

Video.

That's it! We are all set to roll. Lets test this out.

If we run the above code we would see the following queries.

As expected the information is saved correctly.

Next test will try to fetch the

Image and Video and print their attributes

This test simply loads all the

AttributeProvider's and prints the

attributes associated with them. If we run the above test we should see an output similar to this:

That's all folks! We have achieved the desired result.

PS: I tried doing this with

@Loader Annotation but looks like there is a bug in

Hibernate because of which it throws an

NullPointerException. But the fact remains, that something as unique as this requirement was possible using Hibernate without too much trouble is totally AWESOME!

+1 for Hibernate!